Focus+Context Visualization with Distortion Minimization

Yu-Shuen Wang1, Tong-Yee Lee1, Chiew-Lan Tai2

IEEE Transactions on Visualization and Computer Graphics (Proceedings of IEEE Visualization 2008),

Vol. 14, No. 6, Nov. 2008

1National Cheng Kung University, Taiwan

2Hong Kong University of Science & Technology

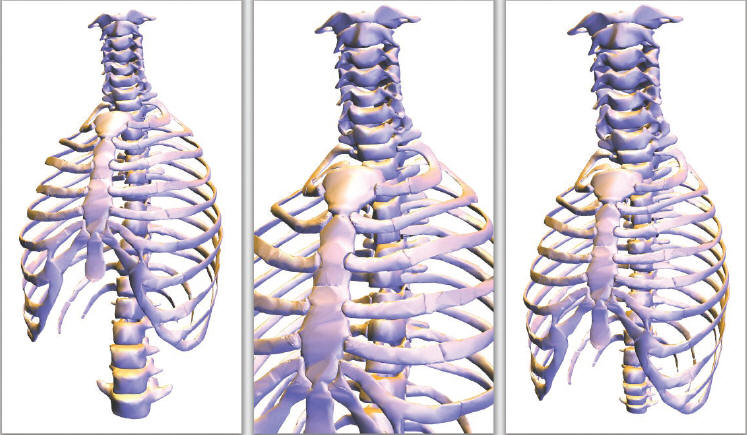

(left) Original view of the thorax model. For a detailed observation of the cervical vertebra, an intuitive approach is to shorten the distance between the model and the camera. However, the other regions, such as the lower part of the spine, will be clipped off due to the limited screen space (middle). In contrast, our method magnifies the focal region while keeping the entire model displayed on the same screen (right).